How Google Glass will usher in an era of superhuman vision

November 1, 2013

Stanford professor Marc Levoy sees a combination of computational imaging and new-form-factor camera-equipped devices that will allow for a set of what he described as “superhero vision” capabilities, Extreme Tech reports.

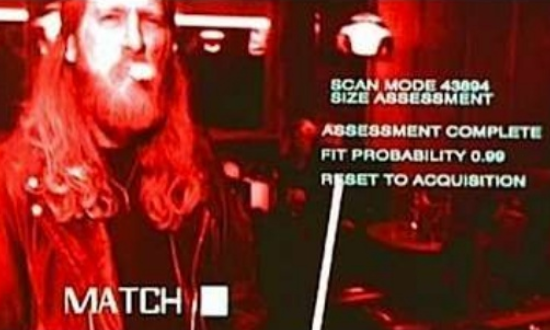

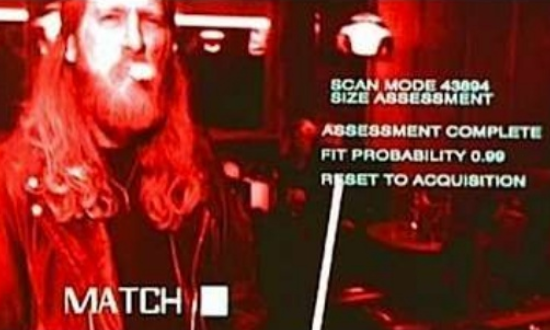

Terminator augmented-reality display (Credit: Orion Pictures)

Rapidly increasing processor power will help fuel this new generation of powerful photographic tools. Levoy, a pioneer in both computer graphics and computational imaging, noted that GPU power is growing by roughly 80% per year, while megapixel counts are growing by only about 20%.
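As a rough worked example of those two growth rates, the short sketch below compounds them to estimate how much GPU power is available per pixel over time. It assumes both rates hold steadily from year to year and that "GPU power" can be spread evenly across pixels; the function name `per_pixel_compute_ratio` is made up for illustration and does not come from Levoy's talk.

```python
# Growth rates quoted in the article (per year).
GPU_GROWTH = 0.80        # GPU power: roughly +80% per year
MEGAPIXEL_GROWTH = 0.20  # sensor resolution: about +20% per year


def per_pixel_compute_ratio(years):
    """Return how many times more GPU power is available per pixel
    after `years` years, assuming both rates compound steadily
    (an assumption, not a claim made in the article)."""
    yearly_ratio = (1 + GPU_GROWTH) / (1 + MEGAPIXEL_GROWTH)
    return yearly_ratio ** years


print(f"{per_pixel_compute_ratio(1):.2f}")  # 1.50
print(f"{per_pixel_compute_ratio(5):.2f}")  # 7.59
```

Under these assumptions, the compute budget per pixel grows by about half each year, which is the headroom that multi-frame techniques can spend.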

That means more horsepower to process each pixel — with the available cycles increasing each year. Coupled with near-real-time multi-frame image capture, the bounds of traditional photography can even be stretched beyond the borders of a single image:

- By combining long exposures with shorter ones, high-dynamic-range (HDR) scenes can be captured, giving the wearer virtual-reality or head-up assistance by seeing into the shadows or even into largely dark rooms.

- Removing objects from photographs by using bits of multiple frames in real time, taking advantage of the combination of high native frame rate and increased computational power (powerful GPUs), perhaps even as a form of augmented reality vision.

- Augmenting video clips to amplify motion — making moving objects much easier to isolate and identify (like measuring a person’s pulse with a simple camera).

- Adding a second camera, or using an array of sensors like Pelican Imaging's, will make it possible to determine the depth of objects in our field of view, allowing total flexibility in managing focus.

- A wearable camera to provide an annotated view of a scene.

- Cloud-powered facial recognition to identify friends and foes alike in near-real-time.
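To make the first item in the list above more concrete, here is a minimal sketch of merging a short and a long exposure into one high-dynamic-range estimate. It is not the method used by Glass or described by Levoy. It assumes a linear sensor response, perfectly aligned frames, and 8-bit pixel values, and the names `hat_weight` and `merge_exposures` are hypothetical.

```python
def hat_weight(value, max_value=255):
    """Weight a pixel by how far it is from being clipped or black.
    Mid-range values get weights near 1; 0 and max_value get 0."""
    mid = max_value / 2
    return 1.0 - abs(value - mid) / mid


def merge_exposures(frames, exposure_times, max_value=255):
    """Merge aligned frames (lists of rows of ints) into relative radiance.

    Each pixel value is divided by its exposure time (linear response
    assumed), then the per-frame estimates are averaged with hat weights.
    """
    height, width = len(frames[0]), len(frames[0][0])
    radiance = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            estimates = [frame[y][x] / t
                         for frame, t in zip(frames, exposure_times)]
            weights = [hat_weight(frame[y][x], max_value) for frame in frames]
            total = sum(weights)
            if total > 0:
                radiance[y][x] = sum(
                    w * e for w, e in zip(weights, estimates)) / total
            else:
                # Every frame is clipped or black here: use a plain average.
                radiance[y][x] = sum(estimates) / len(estimates)
    return radiance


# One row with a shadow pixel and a bright pixel.
short_exposure = [[2, 200]]   # 0.01 s: shadow is nearly black
long_exposure = [[20, 255]]   # 0.1 s: bright pixel is clipped
merged = merge_exposures([short_exposure, long_exposure], [0.01, 0.1])
```

In this toy example the shadow pixel is recovered mostly from the long exposure and the bright pixel entirely from the short one, which is the sense in which combining exposures lets a camera "see into the shadows" without blowing out highlights.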

