Does your brain see things you don’t?

Doctoral student shakes up 100 years of untested psychological theory

November 15, 2013


A new study indicates that our brains perceive objects in everyday life that we may not be consciously aware of.

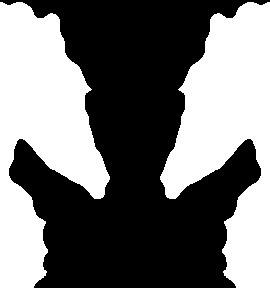

What do you see in this image?* (Credit: Jay Sanguinetti)

The finding by University of Arizona doctoral student Jay Sanguinetti challenges currently accepted models, in place for a century, about how the brain processes visual information.

Sanguinetti showed study participants a series of black silhouettes, some of which contained meaningful, real-world objects hidden in the white spaces on the outsides. He monitored subjects’ brainwaves with an electroencephalogram, or EEG, while they viewed the objects.

Study participants’ brainwaves indicated that even when they never consciously recognized the shapes on the outside of the image, their brains still processed those shapes to the level of understanding their meaning.

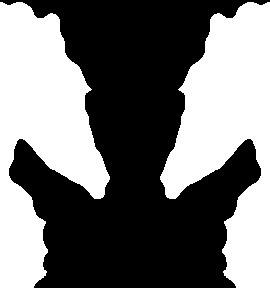

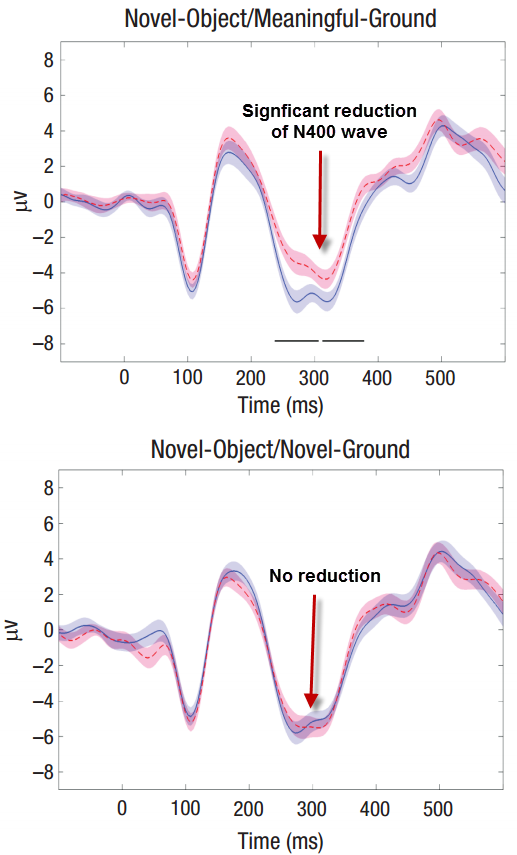

A brainwave that indicates recognition of an object

“There’s a brain signature for meaningful processing,” Sanguinetti said. A peak in the averaged brainwaves called N400 indicates that the brain has recognized an object and associated it with a particular meaning.


“It happens about 400 milliseconds after the image is shown, less than a half a second,” said Peterson. “As one looks at brainwaves, they’re undulating above a baseline axis and below that axis. The negative ones below the axis are called N and positive ones above the axis are called P, so N400 means it’s a negative waveform that happens approximately 400 milliseconds after the image is shown.”

When an object view is repeated, the voltage of a negative-going brainwave known as N400 is reduced. But contrary to currently accepted perception models, it is also reduced even when the study participants reported not (consciously) recognizing the shape of a meaningful background object such as the horse heads (“Novel Object/Meaningful Ground,” top graph). The black horizontal bars just above the abscissa indicate time periods when tests indicated that negative-going activity was significantly reduced for repeat relative to first presentations. However, the N400 voltage was not reduced when there was no meaningful background image (bottom graph). That suggests that their brain did recognize a shape, but didn’t forward the information to the conscious level. (Credit: Jay Sanguinetti et al./Psychological Science)

The presence of the N400 negative peak indicates that subjects’ brains recognize the meaning of the shapes on the outside of the figure.

“The participants in our experiments [in some cases] don’t see those shapes on the outside; nonetheless, the brain signature tells us that they have processed the meaning of those shapes,” said Peterson.

“But the brain rejects them as interpretations, and if it rejects the shapes from conscious perception, then you won’t have any awareness of them.”

“We also have novel silhouettes as experimental controls,” Sanguinetti said. “These are novel black shapes in the middle and nothing meaningful on the outside.”

The N400 waveform does not appear on the EEG of subjects when they are seeing these truly novel silhouettes, without images of any real-world objects, indicating that the brain does not recognize a meaningful object in the image.

“This is huge,” Peterson said. “We have neural evidence that the brain is processing the shape and its meaning of the hidden images in the silhouettes we showed to participants in our study.”

So why does the brain process images that are not perceived?

The finding leads to the question: why would the brain process the meaning of a shape when a person is ultimately not going to perceive it?

“Many, many theorists assume that because it takes a lot of energy for brain processing, that the brain is only going to spend time processing what you’re ultimately going to perceive,” said Sanguinetti’s adviser, Mary Peterson, a professor of psychology and director of the UA’s Cognitive Science Program.


“But in fact the brain is deciding what you’re going to perceive, and it’s processing all of the information and then it’s determining what’s the best interpretation.

Davi Vitela dons a cap used to take EEG scans of her brain activity while she views a series of images. (Credit: Patrick McArdle/UANews)

“This is a window into what the brain is doing all the time. It’s always sifting through a variety of possibilities and finding the best interpretation for what’s out there. And the best interpretation may vary with the situation.”

Our brains may have evolved to sift through the barrage of visual input in our eyes and identify those things that are most important for us to consciously perceive, such as a threat or resources such as food, Peterson suggested.

Finding where the processing of meaning occurs

In the future, Peterson and Sanguinetti plan to look for the specific regions in the brain where the processing of meaning occurs, to understand where and how this meaning is processed, Peterson said.

Images were shown to Sanguinetti’s study participants for only 170 milliseconds, yet their brains were able to complete the complex processes necessary to interpret the meaning of the hidden objects.


“There are a lot of processes that happen in the brain to help us interpret all the complexity that hits our eyeballs,” Sanguinetti said. “The brain is able to process and interpret this information very quickly.”

Jay Sanguinetti, tegestologist (credit: Jay Sanguinetti)

How this relates to the real world

The study indicates that in everyday life, as we walk down the street, for example, our brains may recognize many meaningful objects in the visual scene, but ultimately we are aware of only a handful of those objects, Sanguinetti said.

The brain is working to provide us with the best, most useful possible interpretation of the visual world — an interpretation that does not necessarily include all the information in the visual input.

“The findings in the research also show that our brains are processing potential objects in a visual scene to much higher levels of processing than once thought,” he explained to KurzweilAI. “Our models assume that potential objects compete for visual representation. The one that wins the competition is perceived as the object, the loser is perceived as the shapeless background.

“Since we’ve shown that shapeless backgrounds are processed to the level of semantics (meaning), there might be a way to bias this processing such that hidden objects in a scene might be perceived, by tweaking the image in ways to enunciate certain objects over others. This could be useful in many applications like radiology, product design, and even art.”

The study was funded by a grant to Mary Peterson from the National Science Foundation.

* Sanguinetti showed study participants images of what appeared to be an abstract black object. Sometimes, however, there were real-world objects hidden at the borders of the black silhouette. In this image, the outlines of two seahorses can be seen in the white spaces surrounding the black object.

The study raises interesting questions, such as: does perception of objects that are not consciously perceived — but still recognized by the brain — affect our future thinking and behavior? If so, how would we detect or measure that? This could be important for evaluating the reliability and validity of our thinking and memory — for evidence in trials, for example. — Editor

Abstract of Psychological Science paper

Traditional theories of perception posit that only objects access semantics; abutting, patently shapeless grounds do not. Surprisingly, this assumption has been untested until now. In two experiments, participants classified silhouettes as depicting meaningful real-world or meaningless novel objects while event-related potentials (ERPs) were recorded. The borders of half of the novel objects suggested portions of meaningful objects on the ground side. Participants were unaware of these meaningful objects because grounds are perceived as shapeless. In Experiment 1, in which silhouettes were presented twice, N400 ERP repetition effects indicated that semantics were accessed for novel silhouettes that suggested meaningful objects in the ground and for silhouettes that depicted real-world objects, but not for novel silhouettes that did not suggest meaningful objects in the ground. In Experiment 2, repetition was manipulated via matching prime words. This experiment replicated the effect observed in Experiment 1. These experiments provide the first neurophysiological evidence that semantic access can occur for the apparently shapeless ground side of a border.
