Carnegie Mellon computer searches web 24/7 to analyze images and teach itself common sense

NEIL program labels images, learns associations with minimal help from people

November 22, 2013

NEIL (Never Ending Image Learner) leverages recent advances in computer vision that enable computer programs to identify and label objects in images, characterize scenes and recognize attributes such as colors, lighting and materials, all with a minimum of human supervision. In turn, the data it generates will further enhance the ability of computers to understand the visual world.

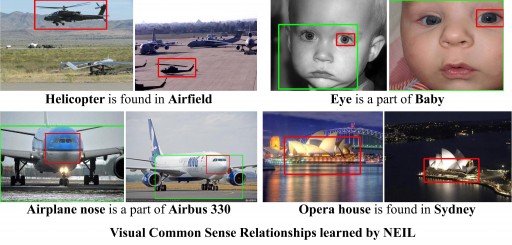

But NEIL also makes associations between these things to obtain common sense information: cars often are found on roads, buildings tend to be vertical, and ducks look sort of like geese.

“Images are the best way to learn visual properties,” said Abhinav Gupta, assistant research professor in Carnegie Mellon’s Robotics Institute. “Images also include a lot of common sense information about the world. People learn this by themselves and, with NEIL, we hope that computers will do so as well.”

Since late July, the NEIL program has analyzed three million images, identifying 1,500 types of objects in half a million images and 1,200 types of scenes in hundreds of thousands of images. It has connected the dots to learn 2,500 associations from thousands of instances.

You can view NEIL’s findings at the project website (or help train it): http://www.neil-kb.com.

World’s largest structured visual knowledge base

One motivation for the NEIL project is to create the world’s largest visual structured knowledge base, where objects, scenes, actions, attributes and contextual relationships are labeled and catalogued.

“What we have learned in the last 5-10 years of computer vision research is that the more data you have, the better computer vision becomes,” Gupta said.

Some projects, such as ImageNet and Visipedia, have tried to compile this structured data with human assistance. But the scale of the Internet is so vast — Facebook alone holds more than 200 billion images — that the only hope to analyze it all is to teach computers to do it largely by themselves.

Shrivastava said NEIL can sometimes make erroneous assumptions that compound mistakes, so people need to be part of the process. A Google Image search, for instance, might convince NEIL that “pink” is just the name of a singer, rather than a color.

“People don’t always know how or what to teach computers,” he observed. “But humans are good at telling computers when they are wrong.”

People also tell NEIL what categories of objects, scenes, etc., to search and analyze. But sometimes, what NEIL finds can surprise even the researchers. Gupta and his team had no idea that a search for F-18 would identify not only images of a fighter jet, but also of F18-class catamarans.

As its search proceeds, NEIL develops subcategories of objects — cars come in a variety of brands and models. And it begins to notice associations — that zebras tend to be found in savannahs, for instance, and that stock trading floors are typically crowded.
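One simple way to picture how associations like "zebras are found in savannahs" could emerge is co-occurrence counting: if a detected object keeps showing up in images of a particular scene, propose a relationship between them. The sketch below is a toy illustration under that assumption, not NEIL's actual algorithm. The per-image detections, the function name `mine_object_scene_relations` and the `min_count`/`min_conf` thresholds are all hypothetical.

```python
from collections import Counter

# Hypothetical per-image detections: (set of objects found, scene label).
# In a real system these would come from object and scene classifiers.
images = [
    ({"zebra", "tree"}, "savannah"),
    ({"zebra"}, "savannah"),
    ({"car", "wheel"}, "road"),
    ({"car"}, "road"),
    ({"zebra"}, "zoo"),
]

def mine_object_scene_relations(images, min_count=2, min_conf=0.6):
    """Propose 'object is found in scene' relationships from co-occurrence.

    Confidence is an estimate of P(scene | object):
    count(object, scene) / count(object).
    """
    object_counts = Counter()
    pair_counts = Counter()
    for objects, scene in images:
        for obj in objects:
            object_counts[obj] += 1
            pair_counts[(obj, scene)] += 1

    relations = []
    for (obj, scene), n in pair_counts.items():
        conf = n / object_counts[obj]
        # Keep only pairs seen often enough and with a strong conditional rate.
        if n >= min_count and conf >= min_conf:
            relations.append((obj, "is found in", scene, round(conf, 2)))
    return sorted(relations)

relations = mine_object_scene_relations(images)
print(relations)
```

With the toy data, "zebra in zoo" is seen only once and is filtered out, while "car is found in road" and "zebra is found in savannah" survive. The thresholds trade recall for precision; set them too low and one-off coincidences become "common sense."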

NEIL is computationally intensive, the research team noted. The program runs on two clusters of computers that include 200 processing cores.

Practical uses

“NEIL’s knowledge can be used wherever machine perception is required (e.g., image retrieval, robotics applications, object and scene recognition, describing images, visual properties of objects and even visual surveillance),” Gupta explained to KurzweilAI.

“NEIL has analyzed more than 5 million images and built a database of 0.5 million images and 3000 relationships in 4 months. The NEIL visual knowledge base also includes visual models of concepts (e.g., car, crowded, trading floor) and relationships between concepts (e.g., cars have wheels, trading floors are crowded). These models and relationships will be made available for academic research use. We also invite academic users to submit concepts that they would like NEIL to learn and later use these models for their own research.

“Once the technology is mature (hopefully in the near future), we expect NEIL’s knowledge base to have multiple commercial applications.”

The research is supported by the Office of Naval Research and Google Inc.

Abstract of International Conference on Computer Vision (ICCV) paper

NEIL (Never Ending Image Learner) is a computer program that runs 24 hours per day and 7 days per week to automatically extract visual knowledge from Internet data. NEIL uses a semi-supervised learning algorithm that jointly discovers common sense relationships (e.g., “Corolla is a kind of/looks similar to Car”, “Wheel is a part of Car”) and labels instances of the given visual categories. It is an attempt to develop the world’s largest visual structured knowledge base with minimum human labeling effort. As of 10th October 2013, NEIL has been continuously running for 2.5 months on a 200-core cluster (more than 350K CPU hours) and has an ontology of 1152 object categories, 1034 scene categories and 87 attributes. During this period, NEIL has discovered more than 1700 relationships and has labeled more than 400K visual instances.
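The abstract describes NEIL's learner as semi-supervised: it starts from limited labels and grows its own training set. The generic idea can be sketched as self-training, where a classifier trained on a few seed examples labels only the unlabeled points it is confident about, adds them to the training set and retrains. This is a toy sketch of that general technique, not NEIL's algorithm (which, per the abstract, also uses the relationships it discovers while labeling). The 2-D features, the category names, `self_train` and its `margin` parameter are hypothetical.

```python
import math

def centroid(points):
    """Mean of a list of 2-D feature vectors."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def self_train(labeled, unlabeled, rounds=3, margin=1.0):
    """Toy self-training loop with a nearest-centroid classifier.

    labeled:   dict mapping category -> list of seed feature vectors
               (needs at least two categories)
    unlabeled: list of feature vectors with no label
    A point is promoted to the labeled set only if it is at least `margin`
    closer to its nearest centroid than to the runner-up.
    """
    labeled = {k: list(v) for k, v in labeled.items()}
    pool = list(unlabeled)
    for _ in range(rounds):
        # Retrain: recompute one centroid per category from current labels.
        centroids = {k: centroid(v) for k, v in labeled.items()}
        promoted = []
        for p in pool:
            dists = sorted((math.dist(p, c), k) for k, c in centroids.items())
            (d1, best), (d2, _) = dists[0], dists[1]
            if d2 - d1 >= margin:
                promoted.append((p, best))
        if not promoted:
            break  # nothing confident left to label
        for p, k in promoted:
            labeled[k].append(p)
            pool.remove(p)
    return labeled, pool

# Hypothetical seeds: one labeled example per category.
seeds = {"car": [(0.0, 0.0)], "duck": [(10.0, 10.0)]}
unlabeled = [(1.0, 0.5), (9.0, 9.5), (5.0, 5.0)]
labeled, remaining = self_train(seeds, unlabeled)
print(labeled)
print(remaining)
```

The two clear-cut points are absorbed in the first round, while the point halfway between the categories is never confident enough and stays unlabeled. Requiring a margin is what keeps such a loop from compounding its own mistakes, although it cannot rule them out entirely.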


Washington