Kinect-based program makes yoga accessible for the blind

October 20, 2013


University of Washington computer scientists have created a software program that watches a user’s movements and gives spoken feedback on what to change to accurately complete a yoga pose.

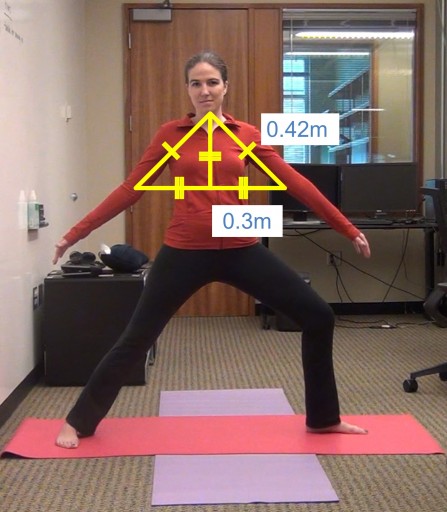

An incorrect Warrior II yoga pose is outlined showing angles and measurements. Using geometry, the Kinect reads the angles and responds with a verbal command to raise the arms to the proper height. (Credit: Kyle Rector/University of Washington)

“My hope for this technology is for people who are blind or low-vision to be able to try it out, and help give a basic understanding of yoga in a more comfortable setting,” said project lead Kyle Rector, a UW doctoral student in computer science and engineering.

The program, called Eyes-Free Yoga, uses Microsoft Kinect software to track body movements and offer auditory feedback in real time for six yoga poses, including Warrior I and II, Tree and Chair poses.

Rector and her collaborators published their methodology (open access) in the conference proceedings of the Association for Computing Machinery’s SIGACCESS International Conference on Computers and Accessibility, Oct. 21–23.

The software instructs the Kinect to read a user’s body angles, then gives verbal feedback on how to adjust his or her arms, legs, neck or back to complete the pose. For example, the program might say: “Rotate your shoulders left,” or “Lean sideways toward your left.”

The result is an accessible yoga “exergame” — a video game used for exercise — that allows people without sight to interact verbally with a simulated yoga instructor.


The technology uses simple geometry and the law of cosines to calculate angles created during yoga. For example, in some poses a bent leg must be at a 90-degree angle, while the arm spread must form a 160-degree angle. The Kinect reads the angle of the pose using cameras and skeletal-tracking technology, then tells the user how to move to reach the desired angle.
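The angle calculation described above can be illustrated with a short Python sketch. It is not the Eyes-Free Yoga code: the function names, the 10-degree tolerance and the spoken phrases are assumptions made for illustration, and the joint positions stand in for the 3D coordinates a skeletal tracker would report. Only the law of cosines and the 90-degree bent-leg target come from the description above.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at joint b, formed by points a-b-c.

    Uses the law of cosines on the triangle whose sides are the
    distances between the three tracked joints.
    """
    ab = math.dist(a, b)
    bc = math.dist(b, c)
    ac = math.dist(a, c)
    if ab == 0 or bc == 0:
        raise ValueError("joint b coincides with a neighboring joint")
    cos_b = (ab**2 + bc**2 - ac**2) / (2 * ab * bc)
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_b = max(-1.0, min(1.0, cos_b))
    return math.degrees(math.acos(cos_b))


def knee_feedback(angle, target=90.0, tolerance=10.0):
    """Return a hypothetical spoken correction, or None if within tolerance.

    The tolerance and the phrases are illustrative assumptions.
    """
    if angle > target + tolerance:
        return "Bend your knee more"
    if angle < target - tolerance:
        return "Straighten your knee a little"
    return None


# Hypothetical hip, knee and ankle positions (meters) for a straight leg.
hip, knee, ankle = (0.0, 1.0, 0.0), (0.0, 0.5, 0.0), (0.0, 0.0, 0.0)
print(knee_feedback(joint_angle(hip, knee, ankle)))
```

Because the calculation depends only on the distances between three joints, the same function would apply to the arm spread by passing, for example, the two wrist positions and a point between the shoulders, with a 160-degree target.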

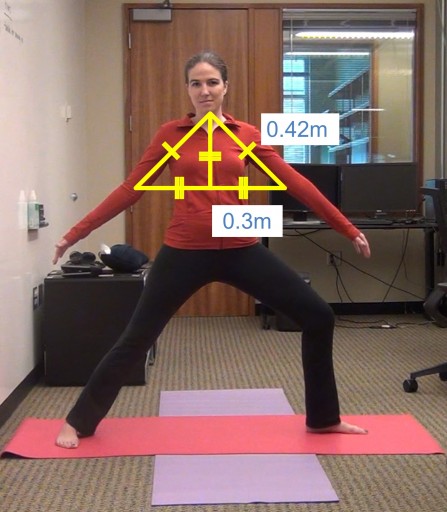

This color-coded image shows the order of priority for correcting a person’s alignment. (Credit: Kyle Rector/University of Washington)

Rector and her collaborators plan to make this technology available online so that users can download the program, plug in their Kinect and start doing yoga.

The research was funded by the National Science Foundation, a Kynamatrix Innovation through Collaboration grant, and the Achievement Rewards for College Scientists Foundation.

Abstract of Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility paper

People who are blind or low vision may have a harder time participating in exercise classes due to inaccessibility, travel difficulties, or lack of experience. Exergames can encourage exercise at home and help lower the barrier to trying new activities, but there are often accessibility issues since they rely on visual feedback to help align body positions. To address this, we developed Eyes-Free Yoga, an exergame using the Microsoft Kinect that acts as a yoga instructor, teaches six yoga poses, and has customized auditory-only feedback based on skeletal tracking. We ran a controlled study with 16 people who are blind or low vision to evaluate the feasibility and feedback of Eyes-Free Yoga. We found participants enjoyed the game, and the extra auditory feedback helped their understanding of each pose. The findings of this work have implications for improving auditory-only feedback and on the design of exergames using depth cameras.

