The brain’s visual data-compression algorithm

December 24, 2013

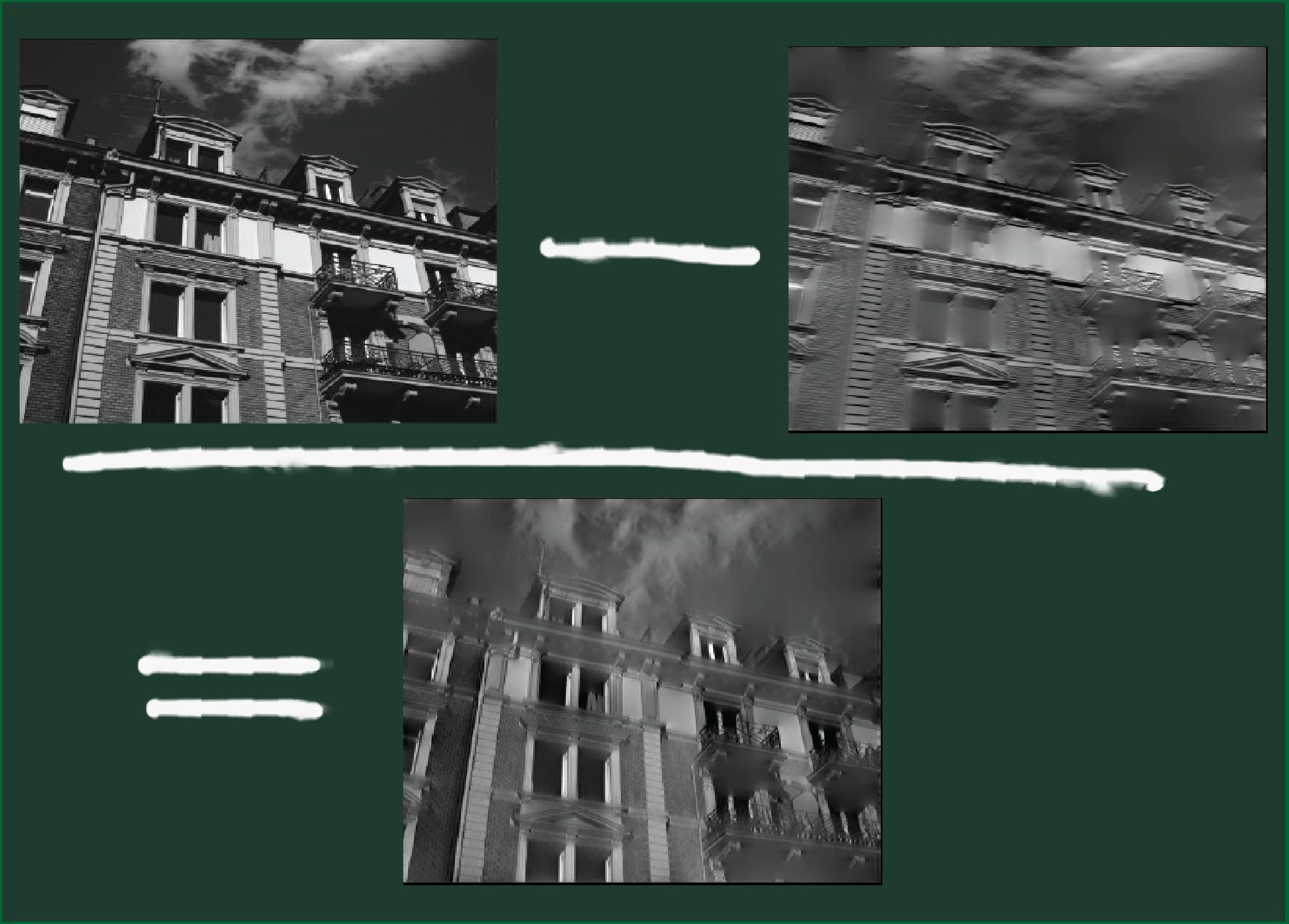

Data compression in the brain: When the primary visual cortex processes sequences of complete images and images with missing elements, here vertical contours, it “subtracts” the images from each other (the brain computes the differences between the images). Under certain circumstances, the neurons forward these image differences (bottom) rather than the entire image information (upper left). (Credit: RUB, Jancke)

“We intuitively assume that our visual system generates a continuous stream of images, just like a video camera,” said Dr. Dirk Jancke from the Institute for Neural Computation at Ruhr University.

“However, we have now demonstrated that the visual cortex suppresses redundant information and saves energy by frequently forwarding image differences,” he said. The approach resembles methods used for video data compression in communication technology. The study was published in Cerebral Cortex (open access).

Jancke and associates recorded neuronal responses in cat visual cortex to natural image sequences showing vegetation, landscapes, and buildings. They created two versions of the images: a complete one, and one from which they had systematically removed vertical or horizontal contours.

When these individual images were presented at 33 Hz (30 milliseconds per image), the neurons represented complete image information. But at 10 Hz (100 milliseconds per image), the neurons represented only those elements that were new or missing, that is, image differences.
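The idea of forwarding image differences rather than whole images can be illustrated with a toy example of difference coding, the principle the article compares to video compression. This is only a minimal sketch under stated assumptions: the 3x3 binary "images" and the function names `frame_difference` and `reconstruct` are hypothetical, and nothing here reproduces the study's analysis or models what neurons actually compute.

```python
def frame_difference(previous, current):
    """Return current - previous, element by element, for two equally sized 2D frames."""
    return [
        [cur - prev for prev, cur in zip(prev_row, cur_row)]
        for prev_row, cur_row in zip(previous, current)
    ]


def reconstruct(previous, difference):
    """Rebuild the current frame from the previous frame plus the stored difference."""
    return [
        [prev + diff for prev, diff in zip(prev_row, diff_row)]
        for prev_row, diff_row in zip(previous, difference)
    ]


# Hypothetical toy frames: 1 marks a contour pixel, 0 marks background.
# "complete" has a horizontal contour (top row) and a vertical contour (left column).
complete = [
    [1, 1, 1],
    [1, 0, 0],
    [1, 0, 0],
]
# Same frame with the vertical contour removed.
no_vertical = [
    [1, 1, 1],
    [0, 0, 0],
    [0, 0, 0],
]

# Only the pixels that changed are nonzero in the difference.
diff = frame_difference(complete, no_vertical)
for row in diff:
    print(row)

# The receiver can recover the new frame from the old frame and the difference.
restored = reconstruct(complete, diff)
```

In this sketch the unchanged horizontal contour contributes only zeros to the difference, so just the removed vertical contour needs to be transmitted; the full frame can still be recovered from the previous frame plus that difference.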

To monitor the dynamics of neuronal activity in the brain in the millisecond range, the scientists used voltage-sensitive dyes. These substances fluoresce when neurons receive electrical impulses and become active, which allows activity to be measured across a cortical surface of several square millimeters. The result is a temporally and spatially precise record of transmission processes within the neuronal network.

Abstract of Cerebral Cortex paper

The visual system is confronted with rapidly changing stimuli in everyday life. It is not well understood how information in such a stream of input is updated within the brain. We performed voltage-sensitive dye imaging across the primary visual cortex (V1) to capture responses to sequences of natural scene contours. We presented vertically and horizontally filtered natural images, and their superpositions, at 10 or 33 Hz. At low frequency, the encoding was found to represent not the currently presented images, but differences in orientation between consecutive images. This was in sharp contrast to more rapid sequences for which we found an ongoing representation of current input, consistent with earlier studies. Our finding that for slower image sequences, V1 does no longer report actual features but represents their relative difference in time counteracts the view that the first cortical processing stage must always transfer complete information. Instead, we show its capacities for change detection with a new emphasis on the role of automatic computation evolving in the 100-ms range, inevitably affecting information transmission further downstream.
